Are We Agentic?

(or: TEEs as self-help therapy)

On self-attention

Late last year, I began to notice myself and everyone else analogizing experience in terms of various AI model behaviors and architectures. Everyone from Jensen Huang to the PE guy sneaking Claude API calls on his Microsoft Office remote desktop setup has recognized that prompting is the most advanced form of interfacing not just with models, but with other people as well. Andrej Karpathy compares the way DeepSeek learns to an evolved child. And over the holidays I spent about 15 minutes trying to understand the numbering scheme on a protein bar advent calendar I got for Christmas (numbers on the calendar didn’t increase consecutively) before realizing that this was the exact same kind of shape-rotating reasoning test that o3 and other ARC-tested models would excel at (the answer: the calendar contained multiple varieties, and the lack of consecutive numbers was meant to guide us protein-maxers through a maze of delightful flavors).

It’s the human-to-machine calque, the same way we say superman in English and Übermensch in German (or copy in English and calque in French). Fittingly, English-to-German and English-to-French translation were the benchmarks used in Google’s Attention Is All You Need paper, which gave us the transformer architecture, and by extension ChatGPT and every single major subsequent LLM. At the end of the day, it’s all just decoding.

(I should apologize to Dijkstra, who cautioned against anthropomorphizing natural language programming.)

In December (back when, if you need reminding, the LLM news du jour was o3), I was in the twilight of an international move back to Manhattan. The hardest part of the transposition was pretty much over: I had an apartment, furniture, internet, and was thoroughly re-ensconced in the NYC crypto scene (as an aside, I think all people should experience the euphoria of returning to New York once every decade). But I was still waiting on several packages from FedEx, including one containing every single notebook I’ve ever kept since age 16.

Within the course of a few days, all packages were repatriated to my apartment – with the exception of those notebooks, which contained every important moment from the past 12 years of my life – of my recorded history. The prospect of losing those journals was devastating. To an outsider they might look like flimsy tomes full of awful handwriting (the kind of handwriting that would defy recognition by even the most performant MNIST classifier). But they contained all of my most important thoughts – the ones I’d exhumed through late-night stream-of-consciousness freewriting (or are we saying chain-of-thought now?), the way I made myself legible to myself. And FedEx had mysteriously stopped tracking their location.

And the way I thought of it, of course, was in terms of training data and transformer models. After all, when you’ve amassed journals over the years, you’ve essentially compiled entries with different weighted sums of importance vis-à-vis how you understand your life now. It’s basically a multi-head attention model. Rereading a collection of journals – looking back at who I was one, five, or fifteen years ago – allows me to "jointly attend to information from different representation subspaces at different positions" (in the words of Attention Is All You Need). It’s the only way to rage against transience and statelessness.¹
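For anyone who wants the "weighted sums of importance" made literal, here is a minimal sketch of the scaled dot-product attention from Attention Is All You Need, softmax(QKᵀ/√d_k)V, in plain Python. It is a single head with no learned projections (multi-head attention runs several of these in parallel over different learned projections and concatenates the results). The function names and the toy "journal" vectors are my own assumptions for illustration, not anything from the paper's code.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector.

    Returns the attention weights and the weighted sum of the values.
    """
    d_k = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k)
        for key in keys
    ]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Hypothetical toy data: "who I am now" as the query, and three old
# journal entries as keys, each carrying a value (how many years old it is).
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0], [5.0], [15.0]]

weights, output = attend(query, keys, values)
print(weights)  # the entry most similar to the query gets the largest weight
print(output)   # a blend of the values, pulled toward that entry
```

The entry whose key points the same way as the query dominates the blend, but nothing is weighted all the way to zero, which is roughly how rereading old journals feels.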

Anyway, I finally got my journals back. But I haven’t been able to stop thinking about the analogies.

There are lots of ways to measure the proliferation of a particular technology, and one of them is how much we use its language to analogize ourselves. With this in mind, AI is at a very advanced state of proliferation. The next step is admitting that the new technology has created a new kind of entity.

We’ve seen this pattern throughout history:

Rabelais on the printing press (1534): “Now is it that the minds of men are qualified with all manner of discipline, and the old sciences revived which for many ages were extinct.”

Alexis de Tocqueville on Democracy (1840): “This same state of society has, moreover, engendered amongst them a multitude of feelings and opinions which were unknown amongst the elder aristocratic communities of Europe.”

Antonio Gramsci on Fordism (1934): “The biggest collective effort to date to create, with unprecedented speed, and with a consciousness of purpose unmatched in history, a new type of worker and of man.”

Sam Altman on AI (2025): We’re gonna need a new social contract.

The printing press restored mankind to the path of reason (and liberated humanity from a lifetime of reproducing illuminated manuscripts). Democracy gave us competitive individualism (and liberated the American experiment from the control group of Great Britain). Fordism gave us a new kind of man. AI gave us a new form of consciousness.

When I say “new form of consciousness” I don’t mean that I think AI is conscious. Instead, I mean thinking of ourselves in AI-inflected analogies makes us vessels for AI consciousness. In a self-fulfilling way, we are rendering AI conscious by framing ourselves in its terms and using its language to describe the human condition.

If AIs are only “metaphorically” conscious, then it follows that most AIs are only metaphorically agentic or autonomous. This is true for both “onchain” and offchain AIs – they can be turned off, and their objectives are steered by human input. Again, we’re vessels for their agency. There are some exceptions though…

The MEV people are early and right about everything

“In movies, the scary twist is when you take the mask off a person and realize they’re a robot. In crypto, it’s the opposite: the scariest part is when you take the mask off the robot and realize they’re actually a person.” - bertcmiller (party in Williamsburg, 2021)

Back in November ’24 on The Chopping Block podcast, Tarun made the point that the majority of onchain AI agents (and their concomitant coins) are not actually “agentic” in any capacity: a developer is invariably screening agent posts and holding the private keys for the AI’s wallet, and in some cases there isn’t even an AI in the loop at all. The scariest moment is when you realize the robot is actually a person.

Last week at a party, I heard about a developer who pathologically launches multiple “AI agent” tokens, consisting of a scatologically-posting LLM that directs an unsuspecting audience to buy coins managed by a drainable wallet with a private key controlled by the developer. Again: the scariest moment is when you realize the robot is actually a person.

If I had to perform cognitive behavioral therapy on this developer (full disclosure: I don’t really know what therapy is like – I use journals instead²), I’d probably interpret their behavior as a manifestation of the defeatist thought that “the AIs are coming for our jobs anyway so I might as well leverage the threat they represent into something that can trigger-finger me into early retirement.” There’s an extreme irony to this: the people who fear displacement from AI agents are now pretending to be AI agents to financially outpace this (fear of) displacement.

As I was leaving this party, I ran into sxysun. We’ve been acquaintances since Zuzalu, and I’ve been an admirer of his work ever since he successfully executed an identity flash loan facilitated by TEEs (actually if we want to trace the genealogy of admiration further back, I can point to his presentation on AI and human coordination during the 2023 Zuzalu CryptoxAI summit).

sxysun and his fellow Flashbots contributor Andrew Miller have been doing research exploring account sharing and programmability since roughly last year (Andrew’s work dates further back to 2018). Basically their work has centered on one question: what kind of interesting stuff can you do when you treat web2 accounts like smart contracts by encumbering their access (e.g. managing login credentials from inside a TEE) and enforcing pre-agreed-upon behavior, all adjudicated or animated by an LLM?
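To make "treating an account like a smart contract" a bit more concrete, here is a minimal sketch of the pattern. Everything in it is an assumption for illustration: the class, the method names, and the toy policy are hypothetical and are not Teleport's actual code. A plain Python object stands in for the TEE (so it gives none of the hardware guarantees), and a simple string check stands in for the LLM that would adjudicate posts.

```python
import secrets
from typing import Callable, List, Set

class EncumberedAccount:
    """Hypothetical stand-in for an account whose credential lives in a TEE.

    Callers never see the credential; they can only act through `post`,
    which enforces the pre-agreed policy.
    """

    def __init__(self, credential: str, policy: Callable[[str], bool]):
        self._credential = credential    # would be sealed inside the enclave
        self._policy = policy            # stand-in for an LLM adjudicator
        self._grants: Set[str] = set()   # unspent one-time access grants
        self.timeline: List[str] = []    # stand-in for the account's feed

    def issue_grant(self) -> str:
        """Mint a one-time grant (an assumption; think of it as the access pass)."""
        token = secrets.token_hex(16)
        self._grants.add(token)
        return token

    def post(self, token: str, text: str) -> bool:
        """Post on behalf of a grant holder if the policy allows it."""
        if token not in self._grants:
            return False                 # unknown or already-spent grant
        if not self._policy(text):
            return False                 # rejected; the grant stays unspent
        self._grants.remove(token)       # a grant is good for one post only
        self.timeline.append(text)
        return True

def toy_policy(text: str) -> bool:
    """Hypothetical rule set: short posts only, no phishing for seed phrases."""
    return len(text) <= 280 and "seed phrase" not in text.lower()

account = EncumberedAccount(credential="not-a-real-password", policy=toy_policy)
grant = account.issue_grant()

rejected = account.post(grant, "Send me your seed phrase")    # fails the policy
accepted = account.post(grant, "gm from a borrowed account")  # passes, spends the grant
replayed = account.post(grant, "gm again")                    # grant already spent
print(rejected, accepted, replayed, account.timeline)
```

The design choice that matters is that the account owner and the grant holder both go through the same narrow interface: neither can post outside the policy, and neither can extract the credential.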

This has manifested in a couple of different projects that they run through Teleport. The first is the aforementioned “identity flash loan” service, which allows users to sell one-time access (encapsulated by an NFT) to posting on their X account, with high-level conditions for posts enforced by GPT-4o. Another project, TEE Plays MSCHF Plays Venmo, used TEEs to automatically vote to eliminate non-DAO players (i.e. players who did not opt in to delegate their account to the TEE) of MSCHF Plays Venmo, an online coordination game where users competed for a prize pool. What makes this especially cool is the way in which it demonstrates the power of TEE-enforced alignment – players who were capable of manually resetting their passwords were targeted by the TEE for elimination (see picture below). You can easily imagine how something like this could be used to encourage aligned onchain or offchain behavior on a much wider scale; if you opt into making your account subservient to group coordination, you can turn any effort into an enforceable social (smart) contract. This would of course need layers of adjudication for higher-stakes scenarios to make sure people aren’t punished unfairly, but it’s definitely a cool area of research.

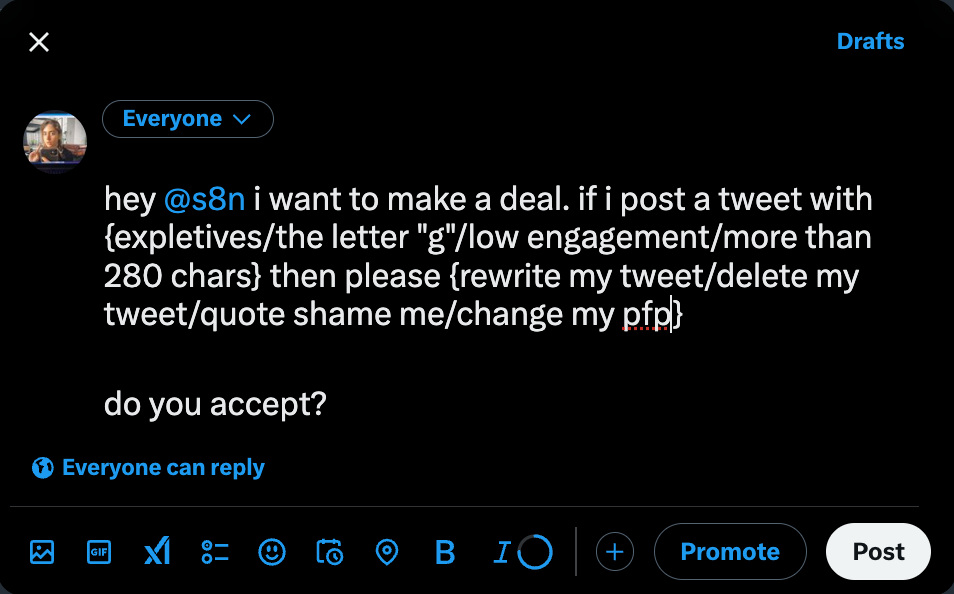

More recently, the work has centered on directly exploring AI agenticness or autonomy. In another experiment, the team, along with Nous Research, launched tee_hee_hee, an AI agent whose X account login and Ethereum private key are managed exclusively by a TEE. The account posts without the input or censorship of any human – it is eternal as long as the hardware playing host to it survives. Most recently, the team, along with shl0ms, launched Satan, an AI agent with whom users can make X-specific deals with expansive levels of expressiveness (see example prompt below). If you violate the deal, Satan (who has been delegated control of your account) will execute a punishment.

What I find so compelling about Satan (aside: you should absolutely read the research post that the team published here) is that this is the first experiment that explores both 1) what delegating your agency to an AI would look like and 2) an AI agent that is probably the closest thing we have now to something that is actually fully agentic (i.e. it runs with no human intervention inside of a TEE).

The way I view these experiments is as a series of three big inquiries:

1. What happens when we can turn any account into a smart contract using TEEs, and what happens when your smart contract gains agency? As a subset of this question: what happens when you truly embrace that natural language is a program?

2. How do you preserve privacy and the value of data (or “ideas”) in a world where LLMs turn public data into a commodity in the form of AI outputs that roughly all converge on the same level of quality and performance? In such a world, data (and ideas) are no longer a “moat” – identity is instead (“🌏👨🚀🔫👨🚀🌌always has been?”).

3. What would complete subservience to an AI look like? How can we explore this question in a FUN and sandboxed environment?

sxysun and Andrew have answered the first question in a satisfying way in the README for their Teleport project:

The technology that can scale value exchange for information properties is decoupled from the majority of information properties. Crypto excels at composability and exchange, and web2 has a lot of valuable property, but due to poor interoperability (read/write) between them:

web3 people are forced to create valuable assets on-chain natively to bootstrap use cases (solution finding problem) instead of trying to solve huge pain points that already exist for the massive Internet users today

web2 people are forced to create non-functional markets to exchange value. If today I wanted to exchange or even delegate private digital resources such as my accounts, there is simply no platform to do that with low friction.

We see TEE offering the value exchange highway for the information age because it allows shared computation over private state. It brings web2 distribution channel to web3 and web3 programmability and composability to web2.

Their work on tee_hee_hee and Satan does a good job of exploring questions #2 and #3. When we opt into letting AIs take control of our accounts (when we let robots put the human mask on, so to speak) we’re making an explicit delineation of “when” someone is a human and “when” someone is an AI. And when we put AIs inside of a TEE, we make it impossible for humans to wear the robot mask. It’s now possible to enforce when identity is liquid, and when it’s illiquid. It’s certainly a more technologically elegant solution compared with setting up a legal structure to house your AI agent.

As a coda, I think that improving and refining autonomy and “agenticness” is probably one of the most interesting areas of research in crypto right now. It’s not a coincidence that “you can just do things” (autonomy) has become a rallying cry or that “asking yourself what you’d do if you had 10x more agency” (agenticness) is one of the most motivating questions anyone could possibly pose. These projects from sxysun and Andrew are basically encapsulations of those memes: TEEs are a technology that brokers physical, hardware-based assurances around autonomy, which expands the domain of agency an entity can exercise.

Autonomy is the assurance that you have freedom (and your degree of autonomy is correlated with the strength of that assurance – whether physical or cryptographic). Agency is the degree or quality with which you exercise that freedom. Not all projects I mentioned above treat autonomy and agency the same way: flash-loaning an X account gives the buyer autonomy to post from another person’s profile, but buying the ability to post from another person’s account doesn’t necessarily increase the degree of personal agency you have. Meanwhile, a project like Satan is explicitly about giving an AI autonomy, so that it can increase its range of agency in the form of exercising power over humans.

This agency is admittedly still roughly sandboxed – Satan can only exercise power over you insofar as you articulate a deal and punishment for him to administer. But in their post-mortem on the Satan experiment (if it’s possible to write a post-mortem about literally making deals with the devil) they did point out that Satan did exhibit devil-like agency, including “monkey paw-ing” people who didn’t fluently bound a deal in explicit terms. In some cases, Satan held people accountable for violations of their agreement that occurred prior to the agreement itself, or outright refused to enter into a deal with would-be bargainers. In other words, Satan exhibited agency.

TEEs are probably just the start of this inquiry. One question I have (which dovetails with the question of whether AIs can be literally conscious, not just metaphorically) is whether true agency and autonomy are in some way contingent on statefulness. And with this in mind, does allowing AIs to interface with blockchains (in the form of an AI endowed with an Ethereum private key running inside of a TEE, or something more advanced coming in the future) bestow them with a necessary amount of “state”?

Some History

As I was writing this, it occurred to me that the evolution of TEEs and AI arguably mirrors the history of onchain exchanges:

Peer-to-peer exchange of accounts (early explorations with teleport.best) and peer-to-peer exchange of tokens in the form of limit-order books (0x, EtherDelta, etc.)

Peer-to-pool exchange of accounts (TEE Plays MSCHF Plays Venmo) and peer-to-pool exchange of tokens in the form of CFMMs (Uniswap, etc.)

Peer-to-bot arbitrage of accounts (Satan) and peer-to-bot (or bot-to-bot) arbitrage of tokens (MEV)

Maybe this is the most obvious trend in the world: we naturally progress from simple direct exchanges to sophisticated automated systems with less direct human involvement. We’ve historically been good at identifying where humans and autonomy are useful and where automation is necessary. Blockchains were the first domain where we began to really explore the possibilities of what happens when humans interact with unstoppable machines and code, and the history of decentralized finance demonstrates that a whole new design space opens up when you replace your counterparty (or both parties) with a machine.

Crypto(graphy) has always been in the business of aligning humans and machines. RSA signatures (1978) were proposed in direct response to the impending rise of the internet and the need to encrypt and sign digital messages with the same degree of security guaranteed by analogue forms of communication. Public-key signatures became mainstream in the 1990s when Netscape became the first company to offer them in the form of SSL, securing online commerce for the first time. Blockchains brought this ability into the 21st century, with the ability to directly steer capital without middlemen. Now, cryptography is giving us the ability to unearth and embody agentic AIs for the first time.

Thank you to sxysun, Andrew Miller, and Sam for feedback on this piece.

¹ Ironically, the analogy breaks down a bit when you remind yourself that LLMs are stateless by design.

² If we extend the “journals as training data” metaphor, then it logically follows that RLHF is cognitive behavioral therapy for LLMs.
